Assignment: GPU

- Introduction

- Part 1: Vector Max (required for checkpoint)

- Part 2: Optimization Problem

- Hints

This original version of this assignment was written by Jack Wadden, based off an assignment by Michael Boyer and Kevin Skadron.

Introduction

For this assignment, we will be using NVIDIA’s CUDA, which allows programmers to use a C-like language to write programs for execution on a GPU.

Your tasks will be to:

- (part 1, for checkpoint) Take a simple, buggy CUDA program we supply and modify it to fix the bugs and improve its performance; and

- (part 2, for checkpoint) Parallelize another problem using CUDA.

Useful References

- For CUDA programming:

- For accessing the department SLURM cluster:

Also, please feel free to use any online resources to help you finish this assignment, but avoid copying code, ala the Honor Code. The assignment assumes you have basic knowledge of CUDA and understand the vocabulary. Generous Googling is encouraged and expected.

Part 1: Vector Max (required for checkpoint)

Setup

Our Starter Program

We have provided you with an example CUDA program that finds the largest single-precision float value within a C float array–dubbed vectorMax. This program finds the maximum on the host CPU and on the device GPU and then compares the two results to check that the GPU computed the vectorMax correctly. It also reports a few different performance statistics for you.

This program has two problems:

- It produces the wrong answer on a large vector; and

- It takes advantage of little of the available parallelism on the GPU

Your task will be to fix these problems.

Running The Program on the SLURM cluster

CUDA must be run on NVIDIA CUDA capable graphics cards. You are welcome to use your laptop or other CUDA cards for development, but you must compile, and evaluate your final code on the department cluster machines. You may use a set of interactive machines (cuda1.cs, cuda2.cs, and cuda3.cs) via ssh and remote desktop using a CS Unix account, but know that these machines may have contention, crash, and should not be used for evaluation. This assignment tutorial assumes you have access the department cluster. See the department SLURM cluster guide for an introduction to batch cluster computing before continuing. Usually the cluster is used by logging into the department’s frontend head nodes power1-6.cs.virginia.edu and then submitting jobs.

Below we will provide instructionst that assume you login to one of the development nodes and submit to the GPU worker queue.

-

Login to one of the SLURM cluster head nodes:

$ ssh

@power1.cs.virginia.edu -

Clone or download the example code from github, cd to the main program directory and run ‘make’ to compile it.

$ git clone https://github.com/woggle/GPU-Programming-Assignment.git $ cd GPU-Programming-Assignment/ $ make

This compiles the code and create an executable

vector_max. -

To run this, you will need to use a machine with a CUDA-capable GPU. The frontend head nodes do not have one.

slurm.shis the script we have supplied to submit to the department GPU SLURM queue. If you examine slurm.sh and you will see the following line:./vector_max 256 -k 1

This is the command that will be run on a GPU-capable machine on the cluster.

The first argument (

256) is the size (numer of elements) of a vector to use.The other arguments (

-k 1) is the version of the kernel to use. We have only implemented version 1, but when you modify this code, you will implement new versions as described below. -

Run this on a GPU-capable machines using the command:

$ srun –gres=gpu slurm.sh

-

If you’re waiting for

srun, you can query the status of the queue withsinfoorsqueue. -

The program should work correctly and report timing information.

Run with a Larger Vector Size

- Modify the

slurm.shto supply as a size of512instead of256. You will notice that we get the wrong result.

What the Supplied Code Does

The supplied kernel is launched mapping an a thread-block of 256 threads to 256 locations in the input vector. The kernel then asks a single thread within the thread block (the one with threadId.x == 0) to iterate over the 256 values to find the maximum. Once found, this thread writes the maximum value to the output vector at index block_id. This ensures that all blocks will find the maximum value within the 256 values they are mapped to, storing that value to a unique location in the output vector. The end result is a vector with the maximum value calculated by each block stored at the index of the block’s ID.

This is unfortunately NOT what we want. If the vector is only of length 256, we are guaranteed that a single block will be launched to the GPU, and the vector’s maximum value will be stored in location 0 of the result vector. HOWEVER, if we want to find the maximum of a vector of length 512, two blocks will be launched, and they will both report their respective max values at locations 0 and 1 in the result vector. If the true maximum is in the second half of our vector, it will end up in location 1, not 0, and the program will report an error.

Your Tasks

Problem 1: Handle vectors of arbitrary* length

The kernel behaves correctly (albeit inefficiently, because 255 threads aren’t doing any work!) but the host code is written such that it can only correctly handle vectors of size 256! Change only the host (CPU) code so that it iteratively launches kernels to find the maximum value of arbitrary length vectors. This should involve some sort of loop wrapping the kernel switch and case statements.

Make sure it can run for very large (10 million element) vectors. Because GPU memory is limited, do not worry about vectors larger than 16,000,000.

Your solution must:

- perform essentially all the maximum computation on the GPU and not the CPU. In particular, you could should transfer values to GPU memory once, then transfer a single result from GPU memory once.

- for an input size of 10 million elements, must make not more than 10 GPU kernel calls.

(HINT: if every block calculates a local maximum, what is the new problem size/vector length for the next kernel launch? Do not worry about the inefficiencies of multiple memory transfers, but consider how you could reduce/eliminate the need for them.)

Problem 2: Use shared memory

The latency of an access to “shared memory” (shared without a thread block) is orders of magnitude less than an access to main memory.

-

Create a new version of the kernel called

vector_max_kernel2. Add aswitchstatement around the calls to the original kernel to use this new version of the kernel if-k 2is supplied as an argument tovector_max. -

Modify this kernel to allocate shared memory for each thread block to hold the values corresponding to each thread. You will probably use the

__shared__keyword (described in the CUDA documentation) to do this. -

Have each thread load its corresponding value into the appropriate entry of the shared memory block in parallel.

-

Have the “lead” (

threadId.x == 0) thread find the maximum by reading the values from this shared memory bock. Since the GPU has relaxed consistency, you will need synchronization (e.g. fences or barriers) to ensure that the values are read after they are written.

Hint: You should not need to modify the host code (except to call vector_max_kernel2 where vector_max_kernel would previously be called).

Problem 3: Use a binary reduction

Currently, each thread block spends 255 iterations to find the maximum. If we use a binary reduction instead, then we can perform only 8 iterations.

-

Create a new version of the kernel called

vector_max_kernel3. -

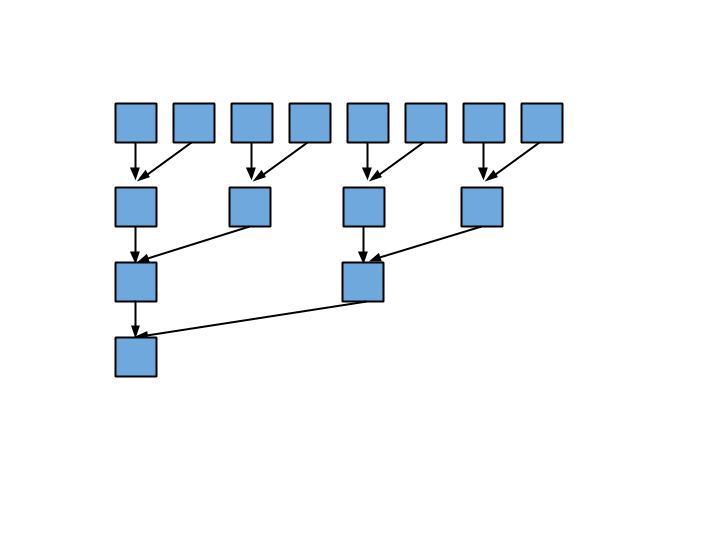

Implement a binary reduction following this pattern (time goes from top to bottom):

Problem 4: Reduce warp divergence.

Because 32 adjancent threads execute a single instruction stream (“warp”), it is better if all threads in a warp do the same thing, making the same control flow decisions.

The reduction pictured above guarantees that threads that “do the same thing” will be as far apart as possible! We would rather group “useful” threads (those doing work) and “useless” threads (those not doing work) into entire warps if possible, so that they share the same control flow.

- Create a new kernel

vector_max_kernel4so that instead of an interleaved reduction, useful threads are always adjacent. (If your solution to problem 3 instead made useful threads adjacent, modify your solution to use the interleaved solution for comparison,

Testing and Submission

-

Test your code with a variety of sizes. Some examples of common bugs:

-

Not handling vector sizes which are not multiples of 256 correctly.

-

Finding the wrong maximum where the maximum element is near the beginning or end of the vector.

-

-

Fill in the

X.XXXs with appropriate performance measurements in a file and submit the result as a file calledanswers-part1.txt:With an input size of 16 000 000, the average runtime of: * kernel 1 was: X.XXX seconds +/- X.XXX seconds * kernel 2 was: X.XXX seconds +/- X.XXX seconds * kernel 3 was: X.XXX seconds +/- X.XXX seconds * kernel 4 was: X.XXX seconds +/- X.XXX secondsFor the +/- values, report the 95th percent confidence interval from at least 5 measurements.

Report the amount of time spentin the GPU kernel, not the CPU overhead.

In our solution

- GPU kernel invocations for kernel 2 take around 90% the time of kernel 1

- kernel 3 is around 60% the time of kernel 2

- kernel 4 is around 50% the time of kernel 3 You should expect to get similar ratios.

-

Submit

answers-part1.txt andvector_max.cu`.

Part 2: Optimization Problem

Pick an algorithm from the list below and implement a parallel version in CUDA, starting with a serial implementation we provide.

- Breadth-first search

- 2D convolution

Your implementation should try to meet the following performance targets:

- Breadth-first search: at least 10 times faster than a naive, serial CPU implementations on one of the graphs we supply;

- 2D convolution: at least 10000 times faster than a naive, serial CPU implementation on a sufficiently large input size.

Alternately, you may choose your own problem with instructor approval.

Questions

Answering the following in a file called answers-part2.txt:

-

Why was (or was not) this problem a good choice for implementation on the GPU?

-

How did you map this problem to the CUDA model? What does a thread do in your implementation? What does a thread block do? What does each GPU kernel call do?

-

For each of the following, indicate whether it is an issue with your algorithm and explain why. Make sure you identify if your code does anything extra to minimize these potential performance issues.

- warp divergence

- synchronization within kernel calls

- synchronization by making separate kernel calls

- frequent data transfers between the GPU and CPU

Submission

Submit your implementation and answers-part2.txt.

Hints

- I’m getting the following error when trying to submit the slurm script:

slurmstepd: error: execve(): slurm.sh: Permission denied

srun: error: artemis2: task 0: Exited with exit code 13 You need to chmod +x the slurm.sh script so SLURM can run it! - I’m getting an error message like the following “FATAL: Module nvidia not found” This means that the driver in your CUDA machine has crashed and that the machine needs to be rebooted or the driver needs to be re-installed. Please send a friendly e-mail to root@cs.virginia.edu politely asking them to make sure that the CUDA machine you were working on has the driver installed correctly.

- My code won’t run when the input size gets really large! This is fine. The total size is limited by the global memory size on the GPU. For evaluation purposes, please use a vector size of 16,000,000 (though I may use different sizes for correctness testing). I was able to run ./vector_max 16,000,000 on a GTX570 graphics card. Anything above this will be limited by the number of threads that can be launched onto a GPU (more precisely 65536 * 256).