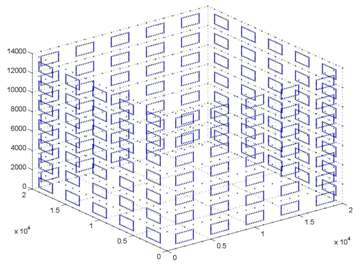

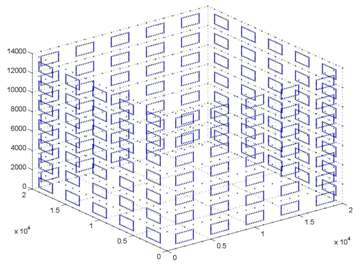

is highly compressible,

|

|

Actual buildings (above) whose CAD model (below) is highly compressible, |

|

The people involved in this research are professors Gabriel Robins (PI) and abhi Shelat (co-PI), and graduate students Nathan Brunelle, Michael Skalak, and Robbie Hott.

To address this growing disconnect, this project is developing a general framework for compression-aware algorithms that operate directly on compressed massive datasets. Each algorithm's speed and memory space performance can dramatically improve with the input's compressibility (i.e., descriptive complexity), rather than depend solely on the input size. This improvement derives from leveraging highly repetitive or parametrically specified input structures, leading to much smaller inputs (and outputs), and enabling algorithms to manipulate very large composite objects while interacting only with their succinct descriptions. We are devising compression-aware algorithms in several classical domains, including for geometric, graph-based, and numerical applications such as sorting and searching.

This project also investigates algorithmically-aware compression schemes specifically designed to allow efficient computations directly on the compressed data. We are also devising new compression-aware data structures that can efficiently handle and store compressed inputs, while still allowing standard queries and access to that data. Our algorithmic contributions will be validated with experiments on both real and synthetic massive data sets. This principled compression-aware algorithms methodology has wide applicability across numerous diverse areas, including networks, bioinformatics, databases, computer graphics, artificial intelligence, geographic information systems, integrated circuit design, and computer-aided engineering.

The intellectual merit of this proposal stems from its broad conceptualization of new families of compression-aware algorithms that will operate directly on massive compressed datasets, coupled with a focus on new applications and practical considerations. This project will design more sophisticated and efficient algorithms, and will enable the processing and mining of very large datasets in numerous areas across academia, industry and government. This project is also training students, conducting outreach, and helping to produce key curricula components in the area of data mining, compression-aware algorithms, optimization, and approximation, and operations research.

The broader impact of the proposed research lies in increasing the efficacy and utility of future compression-aware algorithms and approximation schemes across a wide spectrum of fields, with clear benefits to science, society, and the economy. Data mining and computational sciences have attracted much attention in recent years, but runaway data volumes have swamped existing algorithms and stifled progress. The formulations, algorithms, codes, and theories being developed by this project will help usher in future efficient and practical algorithms and heuristics, and fundamentally change the way in which organizations generate, collect, process, utilize, and mine large datasets.

Some of our findings thus far are summarized in the following draft:

These preliminary results are very encouraging, and demonstrate that this general research area of compression-aware algorithms is ripe with new and useful results that await discovery. We are currently developing additonal compression-aware algorithms for other problem areas, and implementing and benchmarking our new algorithms. We will soon make our results, implementations, and benchmarks available to the larger research community.