1 Assignment Metadata

You need to write every method you submit yourself. You cannot use other people’s code or find the method in an existing library. For example, you should not use Java’s Graphics class, PIL’s ImageDraw module, the CImg draw_* methods, the Bitmap Draw* methods, etc.

You may use external vector product and and matrix multiplication code if you wish. However, the learning these libraries often requires as much time as it would take to write them yourself.

mult4by4(a, b):

matrix answer = 4 by 4 matrix

for(outrow from 1 to 4):

for(outcol from 1 to 4):

answer[outrow,outcol] = 0

for(i from 1 to 4):

answer[outrow,outcol] += a[outrow, i] * b[i, outcol]

return answer

multMbyV(mat, vec):

vector answer = copy of vec

for(outrow from 1 to 4):

answer[outrow] = 0

for(i from 1 to 4):

answer[outrow] += mat[outrow, i] * vec[i]

return answerYou are welcome to seek conceptual help from any source, but cite it if you get it from something other than a TA, the instructor, or class-assigned readings. You may only get coding help from the instructor or TAs.

2 Overview

You’ll need to keep track of the current set of transformations. Model/View transformations are applied first, in reverse order; then perspective is applied. Thus, in the file snippet

rotate 90 1 0 0

frustum -1 1 -1 1 1 10

translate -3 2 1

trif 1 2 3the triangle’s points are first translated, then rotated, then the frustum is applied.

Note, however, that order of operations can be seen in two ways. Rotating the camera 45° clockwise and then moving it 2 units to the right is the same operation as moving the world 2 units to the left and then rotating it 45° counterclockwise. Depending on how you think of the transformations, they can appear to be happening first-to-last or last-to-first.

Some Model/View commands replace the current Model/View; this means later drawing commands ignore any Model/View commands that came before the replacing command.

The entire flow looks like this (required parts are in bold):

- When a color, normal, or texture coordinate is specified, remember it as the

current

value of that type. - When a point is specified, store it in a list as with HW1. We’ll index that list in the say way we did for that HW.

- for the required part, just store the (x, y, z, w)

- some elective parts will also require storing the current normal, color, and/or texture coordinate with the point

- When a drawing command is specified,

- It it involves creating several triangles, do that and send the triangles through the next steps

- Apply Model/View transformations (rotations, scaling, translations, matrices, etc), if any, to each vertex.

- Clip planes happen here.

- Apply the current projection matrix, if any, to each vertex.

- Frustum clipping happens here in OpenGl, but we won’t add it.

- Divide each x, y, and z by w.

- Apply a viewport transformation so that (-1, -1) is placed in the top left corner and (1, 1) in the bottom right corner of the image.

- Rasterize the triangle into fragments, interpolating a z value (and other values as extras require) for each pixel. Only continue with those pixels that are on the screen (i.e., 0 ≤ x < w and 0 ≤ y < h) and have z between 0 and 1.

- Check each pixel’s z against a depth buffer. Keep only those less than the buffer’s current value.

- Texture and lighting happens here to find colors for each fragment. It can also happen before the depth buffer check if you prefer.

- Set the pixel and depth buffer values

3 Assistance, Citation, and Cheating

Code for most of these algorithms is all over the Internet and you probably have half a dozen version already on your machine. This makes matters of plagarism, etc, a bit tricky.

| Action | Response |

|---|---|

| Using code from readings or lecture | OK |

| Using code from TA or instructor | OK, but cite |

| Using ideas from student, tutor, or website | OK, but cite |

| Using code from student, tutor, website, or program | Cheating |

| Using a drawing library instead of setting pixels manually | Cheating |

4 Required Features

The required part is worth 50%

- png width height filename

- same syntax and semantics as HW0 and HW1. This also specifies the viewport transformation, as used in step 3.7. of the above outline. In the viewport step you will need to do something like

x += 1; x *= width/2;and likewise fory;zandware not changed. - xyz x y z

- Add the point (x, y, z, 1) to the vertex list.

- trif i_1 i_2 i_3

Draw the given triangle, filled in

Draw the given triangle, filled in flat

in the current color (as given by the lastcolorcommand, or white if none were given). Apply any transformations and perspective that might exist as outlined in the overview.- color r g b

Defines the current color to be r g b, specified as floating-point values; (0, 0, 0) is black, (1, 1, 1) is white, (1, 0.5, 0) is orange, etc. You only need to track one color at a time.

Defines the current color to be r g b, specified as floating-point values; (0, 0, 0) is black, (1, 1, 1) is white, (1, 0.5, 0) is orange, etc. You only need to track one color at a time.You’ll probably need to map colors to bytes to set the image. All colors ≤ 0.0 should map to 0, all colors ≥ 1 should map to 255; map other numbers linearly (the exact rounding used is not important).

If you do lighting, you’ll need to store light values > 1 and not clamp to 1 until after lighting. If you do not do lighting, you can clamp colors when you read them.

- loadmv a_{1,1} a_{1,2} a_{1,3} a_{1,4} a_{2,1} a_{2,2} … a_{4,4}

Load the given model/view matrix (specified in row-major order). This replaces any existing model/view matrix.

Load the given model/view matrix (specified in row-major order). This replaces any existing model/view matrix.

- loadp a_{1,1} a_{1,2} a_{1,3} a_{1,4} a_{2,1} a_{2,2} … a_{4,4}

Load the given projection matrix (specified in row-major order). This replaces any existing projection matrix.

Load the given projection matrix (specified in row-major order). This replaces any existing projection matrix.

- Per-pixel clipping

Only draw pixels that lie on the screen with a z between 0 and 1.

Only draw pixels that lie on the screen with a z between 0 and 1.

- Depth buffer

Keep a depth value for each pixel, initially 1 for all pixels. Only draw a pixel if its z is less than or equal to the z in the depth buffer. If you draw a pixel, update the depth buffer with it’s z.

Keep a depth value for each pixel, initially 1 for all pixels. Only draw a pixel if its z is less than or equal to the z in the depth buffer. If you draw a pixel, update the depth buffer with it’s z.

5 Optional Features

5.1 Perspective (0–25)

- xyzw x y z w (5 points)

Add the point (x, y, z, w) to the vertex list. Divide by w after multiplying by all matrices but before scan converting.

Add the point (x, y, z, w) to the vertex list. Divide by w after multiplying by all matrices but before scan converting.

- frustum l r b t n f (5 points)

Set the projection to be a perspective projection. The near rectangle of the frustum is (l, b, -n) (l, t, -n), (r, b, -n), (r, t, -n); the point of the pyramid the frustum is made of is at (0, 0, 0); the far plane is at z = -f. This parallels the glFrustum command and the matrix documented by OpenGL should work. This replaces the existing projection transformation.

Set the projection to be a perspective projection. The near rectangle of the frustum is (l, b, -n) (l, t, -n), (r, b, -n), (r, t, -n); the point of the pyramid the frustum is made of is at (0, 0, 0); the far plane is at z = -f. This parallels the glFrustum command and the matrix documented by OpenGL should work. This replaces the existing projection transformation.

- ortho l r b t n f (5 points)

Set the projection to be an orthogonal projection. The box that can be seen is [l, r] in x; [b, t] in y; and [-f, -n] in z. This is similar to the glOrtho command; however, that command sets the near z to −1, not 0. If you use nearVal = 2n - f then the matrix documented by OpenGL should work. This replaces the existing projection transformation.

Set the projection to be an orthogonal projection. The box that can be seen is [l, r] in x; [b, t] in y; and [-f, -n] in z. This is similar to the glOrtho command; however, that command sets the near z to −1, not 0. If you use nearVal = 2n - f then the matrix documented by OpenGL should work. This replaces the existing projection transformation.

- perspective-correct interpolation (0–10 points)

Linear interpolation isn’t quite right for non-coordinate values. When interpolating some value Q while making pixels, instead interpolate {Q \over w}; also interpolate {1 \over w} and use {Q \over w} \div {1 \over w} as the Q value for each fragment.

Linear interpolation isn’t quite right for non-coordinate values. When interpolating some value Q while making pixels, instead interpolate {Q \over w}; also interpolate {1 \over w} and use {Q \over w} \div {1 \over w} as the Q value for each fragment.You get 10 points for perspective-correct interpolation only if you also do either

trigortexture. It applies to normals and lighting too, but is generally less obvious in its results.

5.2 Matrix Manipulation (0–50)

- translate d_x d_y d_z (5 points)

Add a translation to the Model/View transformations.

Add a translation to the Model/View transformations.

- rotatex degrees, rotatey degrees, and rotatez degrees (5 points)

Add a counter-clockwise rotation around the given axis to the Model/View transformations.

Add a counter-clockwise rotation around the given axis to the Model/View transformations.

- rotate degrees axisx axisy axisz (10 points)

Add a counter-clockwise rotation of the given number of degrees around the given axis. This is what glRotate does, and documentation of that function might help.

Add a counter-clockwise rotation of the given number of degrees around the given axis. This is what glRotate does, and documentation of that function might help.

- scale s_x s_y s_z (5 points)

Add a scale to the Model/View transformations.

Add a scale to the Model/View transformations.

- lookat eye center up_x up_y up_z (10 points)

Replace the Model/View with a look-at transformation. Look from the vertex given by index eye toward the vertex given by index center such that the up orientation is as similar to (up_x, up_y, up_z) as the eye and center allow. This parallels the gluLookAt command and the matrix documented by OpenGL should work.

Replace the Model/View with a look-at transformation. Look from the vertex given by index eye toward the vertex given by index center such that the up orientation is as similar to (up_x, up_y, up_z) as the eye and center allow. This parallels the gluLookAt command and the matrix documented by OpenGL should work.

- multmv a_{1,1} a_{1,2} a_{1,3} a_{1,4} a_{2,1} a_{2,2} … a_{4,4} (5 points)

Add the given model/view matrix (specified in row-major order) to the existing model/view transformation. Unlike

Add the given model/view matrix (specified in row-major order) to the existing model/view transformation. Unlike loadmv, this does not replace what was already there.- Normal Transformation (0–10 points; requires lighting and transformation matrices)

Vectors (sunlight, normal, clipplane) ought to be multiplied by a variant of the model/view matrix. If point p is modified by multiplication M \vec{p} then vector \vec{v} is modified by multiplication \vec{v} M^{-1}. However, you do not need to compute the inverse: this is the same as saying that

Vectors (sunlight, normal, clipplane) ought to be multiplied by a variant of the model/view matrix. If point p is modified by multiplication M \vec{p} then vector \vec{v} is modified by multiplication \vec{v} M^{-1}. However, you do not need to compute the inverse: this is the same as saying that- rotations (including

lookat) apply normally; - translations are ignored; and

- scaling is applied in inverse (i.e., ({1 \over sx}, {1 \over sy}, {1 \over sz}).

You do not need to handle

loadmvormultmv.Sunlights should be modified when specified. Normals should be modified during the

trig,trif, etc, command. Be sure to re-normalize the normals after transformation.- rotations (including

5.3 Scan Converting (0–20)

- trig i_1 i_2 i_3 (10 points)

Change

Change xyzto store the current color (as defined by the most recentcolorcommand, or white if there was no such command) with each vertex it defines. Draw a gouraud-shaded triangle using those vertex colors.- Efficient screen clipping (10 points)

When scan-converting triangles, have the first step on both edges and scanlines move not just to an integer, but to an integer inside the bounds of the screen; and stop when you leave the bounds of the screen.

When scan-converting triangles, have the first step on both edges and scanlines move not just to an integer, but to an integer inside the bounds of the screen; and stop when you leave the bounds of the screen.This should allow you to fill gargantuan triangles quickly.

- Interplating more values

- Some of the vertex shader methods will require you to interpolate additional values during scan converting, such as texture coordinates or surface normals. There are not separate points for this, but it is worth designing your scan converting to allow this.

5.4 Geometry modification (0–60)

- clipplane p_1 p_2 p_3 p_4 (15 points)

Clip triangles before drawing them. Clipping a triangle along one plane might turn it into two triangles (by clipping off a corner).

Clip triangles before drawing them. Clipping a triangle along one plane might turn it into two triangles (by clipping off a corner).Points that satisfy (p_1, p_2, p_3, p_4) \cdot (x, y, z, w) >= 0 should be kept. Apply this to the vertices of a triangle after the Model/View transformations and before the Projection transformations.

- cull (5 points)

If you have seen a

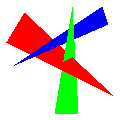

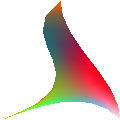

If you have seen a cullcommand, only draw triangles if their vertices would appear in counter-clockwise order on the screen. Otherwise simply ignore them.- rectbezf subd i_{1} i_{2} i_{3} … i_{16} (10 points)

Subdivide the given cubic Bezier patch into 2^{subd} by 2^{subd} quads and then draw each quad as two triangles as if using

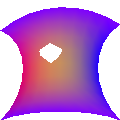

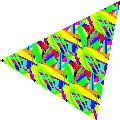

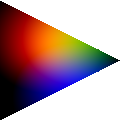

Subdivide the given cubic Bezier patch into 2^{subd} by 2^{subd} quads and then draw each quad as two triangles as if using trif. subd will always be an integer greater than zero.Bezier patch subdivision is done much like curve subdivision: the point at (s, t) is found by finding the (s, t) point on each quad, using those points to make a lower-order bezier patch, and iterating down to just a single point. Thus, in the illustration the 16 input points define 9 quads (black); The first interpolation defines 4 (red); then 1 (blue); and finally a single point on the surface (green). The normal at that point is the surface normal of the blue quad (i.e., the cross-product of the two diagonals of that quad), although the normal is not needed for flat shading.

- rectbezg (5 points)

like

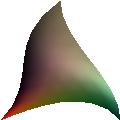

like rectbezfexcept uses color interpolation instead of flat color.- tribezf subd i_1 i_2 i_3 … i_{10} (10 points)

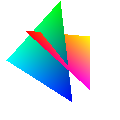

Subdivide the given cubic Bezier patch to have 2^{subd} triangles on each side, then draw each quad as if using

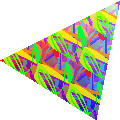

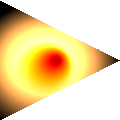

Subdivide the given cubic Bezier patch to have 2^{subd} triangles on each side, then draw each quad as if using trif. subd will always be an integer greater than zero.Bezier patch subdivision is done much like curve subdivision: the point at (s, t) is found by finding the (s,t) point on each triangle, using those points to make a lower-order bezier patch, and iterating down to just a single point. Thus, in the illustration the 10 input points define 6 triangles (black); The first interpolation defines 3 (red); then 1 (blue); and finally a single point on the surface (green). The normal at that point is the surface normal of the blue triangle, although the normal is not needed for flat shading.

- tribezg (5 points)

like

like rectbezfexcept uses color interpolation instead of flat color.- Bezier normals (10 points; requires lighting)

When lighting a Bezier patch in Gouraud or Phong style, use the normals defined by the interpolated shape.

When lighting a Bezier patch in Gouraud or Phong style, use the normals defined by the interpolated shape.

5.5 Fragment Shaders (0–120)

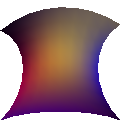

- Textures (30 points)

This is a combination of three commands:

- texcoord s t

- adds a texture coordinate to all subsequent vertices (similar to the way

colordoes withtrig). Iftexcoordhas not occurred prior to an vertex being specified, use (0, 0) for that vertex’s texture coordinate. - texture filename.png

adds a texture image to be used in subsequent drawing commands.

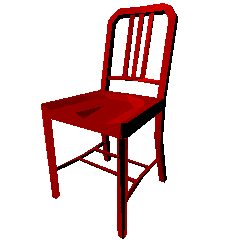

The example images on this page use splat2.png as the texture image. We will test your program with other images and other image sizes too. Notably, any of the images created by running your program should work too.

- trit i_1 i_2 i_3

draw a texture-mapped triangle. Interpolate the (s, t) texture coordinates to each fragment; the color of each fragment is then the color from the texture at the texel closest to (s w, t h) where the texture is w \times h texels in size.

draw a texture-mapped triangle. Interpolate the (s, t) texture coordinates to each fragment; the color of each fragment is then the color from the texture at the texel closest to (s w, t h) where the texture is w \times h texels in size.Texture coordinates should wrap; that is, treat -1.3, -0.3, 0.7, 1.7, etc, as all mapping to the same texel.

You may assume that

tritwill never be called withouttexturebeing called earlier in the file.

- decals (10 points; requires textures and

trig)  If the keyword

If the keyword decalshas appeared in the input file, alpha-blend textures on top of the underlying object color (interpolated as withtrig): transparent parts of the texture should show the underlying object color.If

decalshas not appeared in the input file, just use textures’ RGB values, not the underlying object color, ignoring alpha.- Lighting (25 for sun lights; 30 for point lights; 35 if have both)

This is a combination of the

normalcommand and at least one lighting command.- normal x y z

- define a normal, which will be attached to all subsequent vertices and interpolated during scan conversion

- sunlight l_x l_y l_z

Add a sun-like light source (infinitely far away) coming from the given direction. Store a unit-length version of l_x, l_y, and l_z. Use the current color for its light.

Add a sun-like light source (infinitely far away) coming from the given direction. Store a unit-length version of l_x, l_y, and l_z. Use the current color for its light.

- bulb i_1

Add a point light source centered on the vertex given by index i_1 (apply the current model/view matrix but not the current projection). Use the current color for its light.

Add a point light source centered on the vertex given by index i_1 (apply the current model/view matrix but not the current projection). Use the current color for its light.Point lights require you to interpolate the world-coordinate (x, y, z) of each fragment as well as its screen coordinate (x, y, z) and its normal; the world coordinate is needed to compute the correct direction to the light.

Physically accurate point light intensity would fall with the square of the distance; we will not use falloff in this homework.

For each fragment, use Lambert’s law to determine the amount of illumination. Let c by the cosine of the angle between the surface normal and the light direction, \vec{l} be the color of the light, and \vec{o} be the color of the object. The color of the fragment is then c \vec{l} \vec{o} where the vectors are multiplied element-wise (i.e., red times read, green times green, etc.)

The dot product can efficiently compute c provided you normalize vectors. Do not include lights behind the object (which can be detected by c < 0).

- Multiple Lights (10 points; requires lighting)

Allow an arbitrary number of lights, not just one. Sum all of their contributions.

Allow an arbitrary number of lights, not just one. Sum all of their contributions.

- Very bright lights and darkness-lights (10 points; requires bulb lighting and multiple lights)

Allow light colors to exceed (1, 1, 1) to represent very bright lights and to be negative to represent (physically impossible) darkness emitters. Don’t clamp colors until rendering.

Allow light colors to exceed (1, 1, 1) to represent very bright lights and to be negative to represent (physically impossible) darkness emitters. Don’t clamp colors until rendering.

- shininess \alpha (15 points; requires bulb lighting and multiple lights)

Add a specular component to lighting triangles. Use the Blinn-Phong model: the specular intensity is (\vec{H} \cdot \vec{n})^{\alpha} where \vec{n} is the surface normal and \vec{H} is the normalized halfway vector between the direction to the light and the direction to the eye. Because the Model/View always moves the eye to the origin, the direction to the eye is simply the negative of the location of the point being lit.

Add a specular component to lighting triangles. Use the Blinn-Phong model: the specular intensity is (\vec{H} \cdot \vec{n})^{\alpha} where \vec{n} is the surface normal and \vec{H} is the normalized halfway vector between the direction to the light and the direction to the eye. Because the Model/View always moves the eye to the origin, the direction to the eye is simply the negative of the location of the point being lit.The color added by specularity is the specular intensity times the color of the light. The color of the object is not used.

Only act based on the most recent

shininesscommand. If the shininess is ≤ 0, do not add specularity.- flatnormals (10 points; requires lighting)

if the keyword

if the keyword flatnormalshas appeared in the input file, ignore per-vertexnormals fortrifcommands and instead use the perpendicular vector of the triangle. This can be found as (\vec{p_2}-\vec{p_1})\times(\vec{p_3}-\vec{p_1}).