Projects

- An Explainable Machine Learning Platform for Single Cell Data Analysis

- Collaborative Research: Co-designing Hardware, Software, and Algorithms to Enable Extreme-Scale Machine Learning Systems

- A Scalable Hardware and Software Environment Enabling Secure Multi-party Learning

- Knowledge-Guided Meta Learning for Multi-Omics Survival Analysis

- Collaborative Research: Mining and Leveraging Knowledge Hypercubes for Complex Applications

- [Completed] Collaborative Research: Knowledge Guided Machine Learning: A Framework for Accelerating Scientific Discovery

- Multimodal Machine Learning for Data with Incomplete Modalities

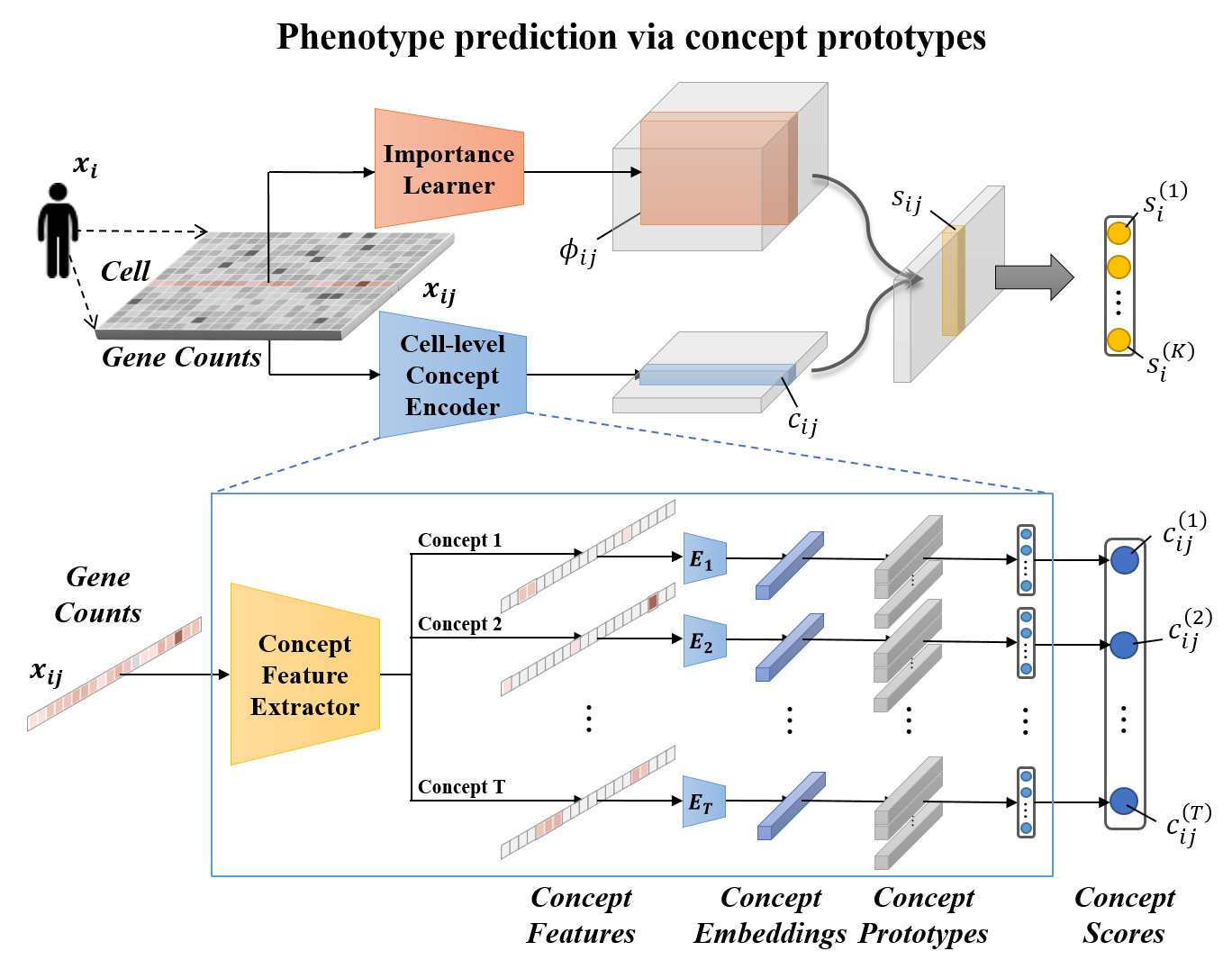

An Explainable Machine Learning Platform for Single Cell Data Analysis

This project aims to develop an explainable machine learning platform that predicts phenotypes while identifying the genes and pathways associated with them in specific cell types.

Collaborative Research: Co-designing Hardware, Software, and Algorithms to Enable Extreme-Scale Machine Learning Systems

Newly emerging Artificial Intelligence of Things (AIoT) and Internet of Senses (IoS) systems integrate the Internet of Things (IoT) with artificial intelligence (AI), making mobile and embedded devices smart, communicative, and powerful by enabling them to process data and make intelligent decisions. This project aims to provide a new generation of systems, algorithms, and tools that facilitate this deep integration at extreme scale.

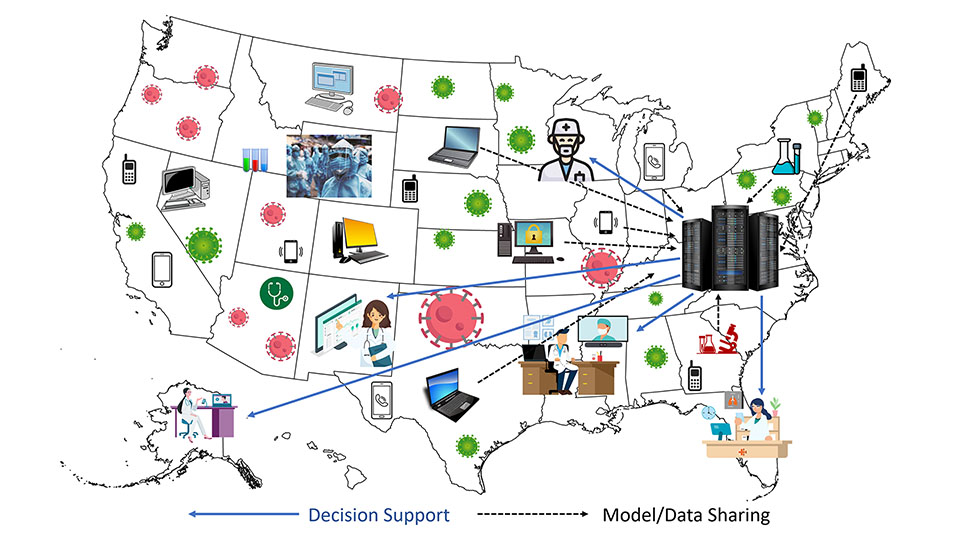

A Scalable Hardware and Software Environment Enabling Secure Multi-party Learning

A new era of collaborative learning is emerging as part of the next phase of ubiquitous computing, in which researchers at different sites work together to correlate the disparate data they have acquired separately. It is therefore imperative to establish a platform for collaborative, multi-party data analysis through which participating parties can share their data with one another under varying degrees of privacy control. To make such an environment available to the community, this project establishes a scalable and trusted hardware and software environment, termed Bridge, that supports a general form of collaborative machine learning.

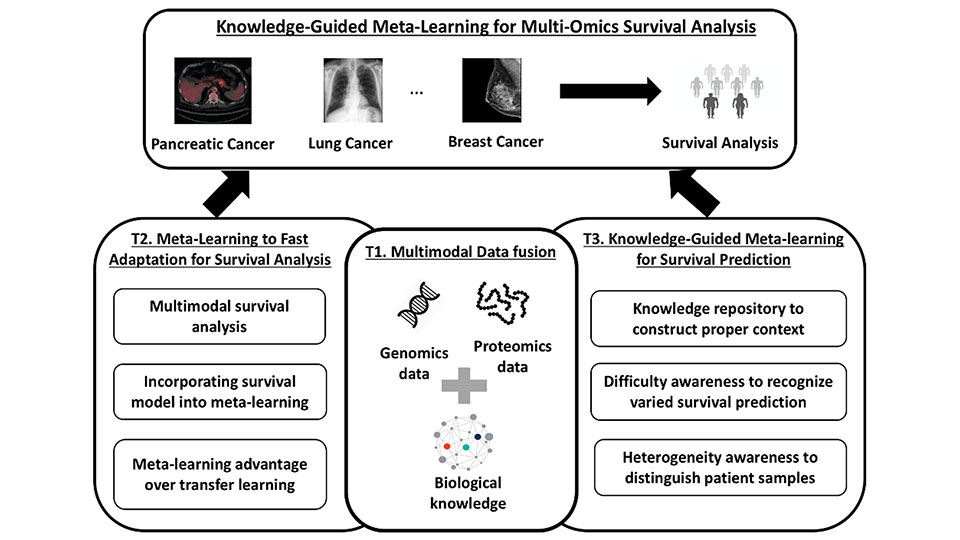

Knowledge-Guided Meta Learning for Multi-Omics Survival Analysis

Survival analysis, which predicts the time until an event occurs (e.g., death in the case of cancer), is of crucial importance for cancer biology research and disease treatment. Recent advances in high-throughput genomics technologies have made massive amounts of high-dimensional omics data available. This project develops a new knowledge-guided meta-learning framework that integrates biological knowledge with meta-learning for multi-omics survival analysis.

Collaborative Research: Mining and Leveraging Knowledge Hypercubes for Complex Applications

A knowledge repository is a machine-readable structure that stores knowledge about various entities (e.g., organizations, events, genes) and facilitates efficient information seeking. In many domains, knowledge varies across contexts, and the commonly adopted flat structure cannot capture the complex knowledge associated with different contexts. To make knowledge resources more findable, accessible, interoperable, and reusable (FAIR), this project plans to conceptualize a new structure, the Knowledge Hypercube, for organizing and retrieving knowledge in a way that can support complex applications in various domains.

[Completed] Collaborative Research: Knowledge Guided Machine Learning: A Framework for Accelerating Scientific Discovery

The success of machine learning (ML) in many applications where large-scale data is available has led to a growing anticipation of similar accomplishments in scientific disciplines. However, a purely data-driven approach to modeling a physical process can be problematic. This project is developing novel techniques to explore the continuum between knowledge-based and ML models, in which scientific knowledge and data are integrated synergistically.

Multimodal Machine Learning for Data with Incomplete Modalities

With advances in data collection techniques, large amounts of multimodal data (including text, images, video, and audio) collected from multiple sources are now widely available. However, effectively integrating and analyzing multimodal data remains a challenging problem, especially when some modalities are missing. This project develops a multimodal machine learning framework that formulates a new fundamental structure to facilitate the extraction and integration of complex information from multimodal data with incomplete modalities for classification and prediction.